Features

Event deduplication car face

Event deduplication prevents one person or car from being recognized as several different Events within a given period of time, and simplifies integration with PACS that do not support deduplication.

Note

Deduplication works for events from all connected cameras.

In FindFace Lite you can set a period of time during which Events with the same person or car are considered duplicates. The system reacts to and processes only the first Event; the following duplicate Events are ignored.

Possible scenarios of duplication

Events with Car can be duplicated if the vehicle stops for a while in front of the barrier or if the rear license plate of a vehicle that has already passed through the barrier is recognized by the exit camera.

Events with Face can be duplicated if the camera captures the face of a person who has already passed through the PACS and who shows their face to the camera again for any reason.

More scenarios are described in the FindFace Lite scenarios article.

How to configure event deduplication

Open the configuration file located in FFlite -> api_config.yml using one of the editors (e.g., the nano command).

Enable the feature by setting the dedup_enabled parameter to true.

Configure whether duplicates are saved in the save_dedup_event parameter: set true to save duplicates or false to discard them.

Set the parameters for car and face recognition separately.

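| Parameter | Default value | Description |
|---|---|---|
| face_dedup_confidence | 0.9 | Matching confidence at which two face Events are considered duplicates. If the matching score is equal to or greater than the set value, the Event is labeled as a duplicate. |
| car_dedup_confidence | 0.9 | Matching confidence at which two car Events are considered duplicates. If the matching score is equal to or greater than the set value, the Event is labeled as a duplicate. |
| face_dedup_interval | 5 | Time interval in seconds during which Events with the same face are considered duplicates. |
| car_dedup_interval | 5 | Time interval in seconds during which Events with the same car are considered duplicates. |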

Save the changes in the file and close the editor.

Apply the new settings by restarting the api service with the following command:

docker compose restart api

Tip

Read the detailed instructions on all configuration settings in the article.
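The following is a minimal sketch of how the confidence and interval parameters described above could combine into a single decision. It is not FindFace Lite's actual implementation: the function name `is_duplicate` and its arguments are hypothetical, and treating the interval bound as inclusive is an assumption.

```python
def is_duplicate(seconds_since_first: float,
                 matching_score: float,
                 dedup_confidence: float = 0.9,
                 dedup_interval: float = 5.0) -> bool:
    """Decide whether a new Event would count as a duplicate.

    seconds_since_first: time elapsed since the first Event (hypothetical input).
    matching_score: similarity between the new Event and the first one.
    The defaults mirror the documented defaults (0.9 and 5 seconds).
    Assumption: the interval bound is inclusive.
    """
    within_interval = 0 <= seconds_since_first <= dedup_interval
    similar_enough = matching_score >= dedup_confidence
    return within_interval and similar_enough


# The same face seen 2 seconds after the first Event with a 0.95 score.
print(is_duplicate(2.0, 0.95))
# The same car seen again 6 seconds later falls outside the 5-second interval.
print(is_duplicate(6.0, 0.95))
```

Both conditions must hold: a high score outside the interval, or a low score inside it, would be processed as a new Event under this reading.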

Spam events filtering car face

Spam events filtering distinguishes real Events that need processing from so-called “spam” Events that are captured by the system accidentally.

When creating an Event from an added Camera or sending an Event from an edge device via a POST request, you can set the image detection area and exclude the part of the frame that produces spam.

Possible scenarios of spam events

Events with Car can be spam events if a vehicle is parked near the barrier and its license plate falls into the field of view of the video camera: multiple Events will be generated.

If the license plate is found in the PACS database, then the barrier will be opened.

Events with Face can be spam events if a person stays near the camera but is not going to pass through the PACS. If the person is found in the PACS database, the barrier will be opened.

More scenarios are described in the FindFace Lite scenarios article.

How to configure spam filtering

Frame capture settings are configured in the roi parameter, where you specify the distance from each side of the frame or image.

Tip

Currently, these settings are available only via API requests.

To configure the roi setting for video streams, use the following requests and the format WxH+X+Y for the roi parameter:

POST /v1/cameras/ to create the Camera object.

PATCH /v1/cameras/{camera_id} to update the Camera object.

{
  "name": "test cam",
  "url": "rtmp://example.com/test_cam",
  "active": true,
  "single_pass": false,
  "stream_settings": {
    "rot": "",
    "play_speed": -1,
    "disable_drops": false,
    "ffmpeg_format": "",
    "ffmpeg_params": [],
    "video_transform": "",
    "use_stream_timestamp": false,
    "start_stream_timestamp": 0,
    "detectors": {
      "face": {
        "roi": "1740x915+76+88",
        "jpeg_quality": 95,
        "overall_only": false,
        "filter_max_size": 8192,
        "filter_min_size": 1,
        "fullframe_use_png": false,
        "filter_min_quality": 0.45,
        "fullframe_crop_rot": false,
        "track_send_history": false,
        "track_miss_interval": 1,
        "post_best_track_frame": true,
        "post_last_track_frame": false,
        "post_first_track_frame": false,
        "realtime_post_interval": 1,
        "track_overlap_threshold": 0.25,
        "track_interpolate_bboxes": true,
        "post_best_track_normalize": true,
        "track_max_duration_frames": 0,
        "realtime_post_every_interval": false,
        "realtime_post_first_immediately": false
      }
    }
  }
}
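To make the WxH+X+Y format concrete, here is a small illustrative sketch that parses and builds such strings and prepares a request body containing only the roi setting. The helper names are hypothetical. Reading X and Y as the offsets of the area's top-left corner follows the usual geometry notation and is an assumption, as is the idea that a PATCH request accepts a partial body with just this nested key.

```python
import json
import re

# WxH+X+Y, e.g. "1740x915+76+88"
_ROI_PATTERN = re.compile(r"^(\d+)x(\d+)\+(\d+)\+(\d+)$")


def parse_roi(roi: str) -> tuple[int, int, int, int]:
    """Split a WxH+X+Y string into (width, height, x, y)."""
    match = _ROI_PATTERN.match(roi)
    if match is None:
        raise ValueError(f"roi must look like WxH+X+Y, got {roi!r}")
    width, height, x, y = (int(group) for group in match.groups())
    return width, height, x, y


def format_roi(width: int, height: int, x: int, y: int) -> str:
    """Build a WxH+X+Y string from its four components."""
    return f"{width}x{height}+{x}+{y}"


def camera_roi_body(roi: str) -> str:
    """Return a JSON body that sets roi for the face detector.

    The nesting mirrors the Camera object shown above; sending only
    this fragment in a PATCH request is an assumption.
    """
    parse_roi(roi)  # validate the format before building the body
    return json.dumps({"stream_settings": {"detectors": {"face": {"roi": roi}}}})


print(parse_roi("1740x915+76+88"))
print(camera_roi_body(format_roi(1740, 915, 76, 88)))
```

Validating the string before sending it catches formatting mistakes locally instead of relying on the API's error response.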

To configure the roi setting for images received from an edge device, use the following request and the format [left, top, right, bottom] for the roi parameter:

POST /v1/events/{object_type}/add to post the Event object.

{
  "object_type": "face",
  "token": "change_me",
  "camera": 2,
  "fullframe": "somehash.jpg",
  "rotate": true,
  "timestamp": "2000-10-31T01:30:00.000-05:00",
  "mf_selector": "biggest",
  "roi": [15, 20, 12, 14]
}
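As an illustration of the [left, top, right, bottom] format, the sketch below converts the four values into the rectangle that remains for detection. It assumes the values are distances in pixels from the corresponding image sides, which is how the roi parameter is described earlier in this section. The function name and the example image size are hypothetical.

```python
def detection_area(image_width: int, image_height: int,
                   roi: list[int]) -> tuple[int, int, int, int]:
    """Return (x1, y1, x2, y2) of the area left after trimming each side.

    Assumption: roi holds distances from the left, top, right and
    bottom sides of the image, in pixels.
    """
    left, top, right, bottom = roi
    if min(roi) < 0:
        raise ValueError("roi distances must be non-negative")
    x1, y1 = left, top
    x2, y2 = image_width - right, image_height - bottom
    if x1 >= x2 or y1 >= y2:
        raise ValueError("roi leaves no area to detect in")
    return x1, y1, x2, y2


# A 1920x1080 image with the roi values from the request above.
print(detection_area(1920, 1080, [15, 20, 12, 14]))
```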

Liveness face

Liveness is a technology used in CCTV cameras to determine whether the biometric trait presented to the system belongs to a live person or is a spoof attempt such as a photograph, a video, or a mask.

FindFace Lite uses a passive method of liveness detection, which relies on algorithms that analyze various features of a person’s face, such as pupil movement, facial expressions, or the presence of micro-movements, to determine whether the person is alive.

This method is less invasive than other liveness detection methods, and:

it does not require any action from the user, which can lead to higher user acceptance rates and fewer errors due to user discomfort or mistakes;

it works in real time: it can quickly and accurately authenticate a user without causing any delay or disruption to the authentication process;

it does not require any additional hardware or sensors;

it is more difficult to spoof than other types of liveness detection, such as those that require the user to perform a specific action.

Possible scenarios of liveness detection

Liveness detection can be used in many scenarios. Here are several of them:

Banking: Liveness detection may be used in banking to verify the identity of customers.

For example, a customer may be required to present their face to a camera during a video call with a bank representative, and the system can use liveness detection to ensure that the customer is alive and not presenting a fake photo.

Employee Access Control: Liveness detection can be used to control employee access to secure areas in the workplace.

For example, if an employee attempts to enter a secure area by presenting a photograph or a mask, the CCTV camera with liveness detection can deny access and notify the security team.

How to configure liveness

Open the configuration file located in FFlite -> api_config.yml using one of the editors (e.g., the nano command).

Enable the feature by adding the liveness value to the face_features parameter.

Configure the liveness detection source in the liveness_source parameter.

If the detection source is going to be an image (Events will be created via POST /v1/events/{object_type}/add), set the eapi value.

If the detection source is going to be a video stream (Events will be created in FindFace Lite automatically after recognition from a video stream), set the vw value.

Save the changes in the file and close the editor.

Apply the new settings by restarting the api service with the following command:

docker compose restart api

Tip

Read the detailed instructions on all configuration settings in the article.
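The configuration steps above can be summarized in a short sketch. It models the settings as a plain Python dictionary. Treating face_features as a list in a flat mapping is an assumption about the layout of api_config.yml, and the function name and the "image" and "videostream" labels are hypothetical.

```python
def enable_liveness(config: dict, source_kind: str) -> dict:
    """Return a copy of config with liveness enabled.

    source_kind is a hypothetical label: "image" maps to the eapi value,
    "videostream" maps to the vw value.
    Assumption: face_features is stored as a list of feature names.
    """
    sources = {"image": "eapi", "videostream": "vw"}
    if source_kind not in sources:
        raise ValueError(f"unknown source kind: {source_kind!r}")
    updated = dict(config)
    features = list(updated.get("face_features", []))
    if "liveness" not in features:
        features.append("liveness")  # keep features that are already enabled
    updated["face_features"] = features
    updated["liveness_source"] = sources[source_kind]
    return updated


print(enable_liveness({"face_features": ["headpose"]}, "image"))
```

The original dictionary is left untouched, so the result can be compared with the previous settings before anything is written back to the file.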

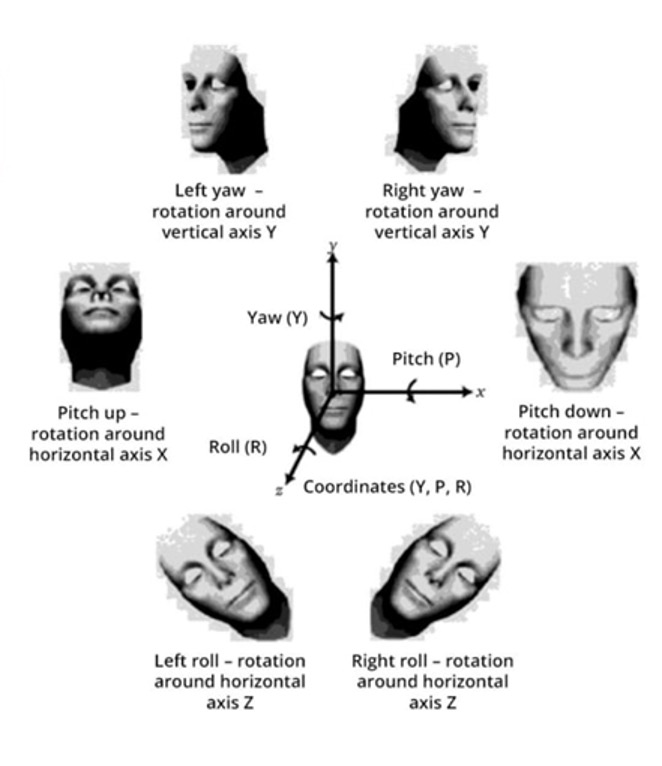

Headpose face

The headpose feature is the ability to detect and track the orientation and movement of a person’s head relative to the CCTV camera in real time.

Warning

The headpose feature does not work if the person wears a medical mask.

To detect the headpose in two-dimensional space, FindFace Lite uses pitch and yaw.

Pitch refers to the rotation of the head around its horizontal axis, which runs from ear to ear. Positive pitch indicates that the head is tilted forward, while negative pitch indicates that the head is tilted backward.

Yaw refers to the rotation of the head around its vertical axis, which runs from top to bottom. Positive yaw indicates that the head is turned to the right, while negative yaw indicates that the head is turned to the left.
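The sign conventions above can be illustrated with a short sketch. The function names are hypothetical, the angles are assumed to be in degrees, and the 15-degree threshold is an arbitrary illustrative value, not a FindFace Lite default.

```python
def describe_headpose(pitch: float, yaw: float) -> str:
    """Translate pitch and yaw signs into words, per the definitions above."""
    if pitch > 0:
        vertical = "tilted forward"
    elif pitch < 0:
        vertical = "tilted backward"
    else:
        vertical = "level"
    if yaw > 0:
        horizontal = "turned right"
    elif yaw < 0:
        horizontal = "turned left"
    else:
        horizontal = "straight"
    return f"{vertical}, {horizontal}"


def is_facing_camera(pitch: float, yaw: float, max_angle: float = 15.0) -> bool:
    """Check that both angles stay within a hypothetical threshold."""
    return abs(pitch) <= max_angle and abs(yaw) <= max_angle


print(describe_headpose(10.0, -25.0))
print(is_facing_camera(10.0, -25.0))
```

A check of this kind is one way an access control integration could ignore faces that are turned away from the camera.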

Possible scenarios of headpose detection

Headpose detection can be used in various scenarios where face recognition is used, to improve accuracy and security. Here are several of them:

Improving employee access control systems by ensuring that the face of the employee matches the expected orientation.

For example, if a person stays near the camera and turns their head toward it, but is not going to pass through the PACS, access will not be granted because of the detected headpose.

Improving comfort when using the PACS. If an employee approaches a security checkpoint at an awkward angle, the CCTV camera with headpose detection can trigger an alert to the access control system to reposition the camera and ensure proper orientation of the face.

How to configure headpose

Open the configuration file located in FFlite -> api_config.yml using one of the editors (e.g., the nano command).

Enable the feature by adding the headpose value to the face_features parameter.

Save the changes in the file and close the editor.

Apply the new settings by restarting the api service with the following command:

docker compose restart api

Tip

Read the detailed instructions on all configuration settings in the article.